Unity Apps for Apple Vision Pro

Developing apps for newly released devices is always challenging. With visionOS and Apple Vision Pro, Apple cooperated closely with Unity to ensure that transitioning to the new platform is as smooth as possible. This includes not only new projects but also existing projects with the potential to be adapted to this platform.

Before We Got Our Hands on Vision Pro

Even though the headset was released in the US on February 2, 2024, and is expected to go on sale in other parts of the world as late as Q4 2024, we had the opportunity to prepare for it much earlier.

A detailed guide for preparing Unity projects for transition to visionOS has been available since July 2023. In November 2023, Unity PolySpatial, which was only available in closed beta until then, became accessible to all Unity Pro, Industry, and Enterprise users. Unity PolySpatial, along with the visionOS device simulator in Xcode, allowed us to test how our Unity apps would work on this new platform.

These tools ensured that any Unity project required to work on visionOS in the future was set up correctly. Now that we have the physical device available in our offices, we can confirm that they made the deployment of these apps much easier.

Adapting Unity Projects to visionOS

Once you are accustomed to the set of rules from the initial guide, developing apps for visionOS is very comparable to developing for existing platforms, such as Meta Quest 3, with a few unique challenges, of course. The first, and probably most important, is the lack of native physical controllers. The second is considering how each app will exist within the headset’s understanding of the surrounding space.

The Role of Unity PolySpatial

Unity PolySpatial is a key factor that makes development for visionOS seem so familiar. It is a collection of technologies targeted to address the challenges of developing immersive apps for either Shared Space or Immersive Space.

Content on Apple Vision Pro is rendered using RealityKit. Since Unity materials and shaders need to be translated to this new environment, we need to make sure we use compatible materials. This is where PolySpatial comes into play. It lets us use tools that we are familiar with and takes care of the translation for us. It handles not just materials and shaders, but also many other Unity features such as Mesh renderers, Particle effects, Sprites, and Simulation features.

Conveniently, it also comes with a list of sample scenes that cover most of the interaction and object placement types.

Developing with Unity for visionOS

For anyone who has tried developing XR apps before, switching to visionOS does not require a steep learning curve. It’s probably the exact opposite: many tools and systems will be familiar.

One drawback we noticed was getting used to the terminology. It is not unusual for different companies to use different names for the same thing. In this case, it disrupts the consistency of terminology used for app classification across different platforms.

Apple uses its own labels for what are essentially windowed, mixed reality, and virtual reality apps. In addition, multiple apps can run simultaneously, which adds another level of confusion to app classification.

Let’s clear this up with a simplified breakdown of the terminology used by Apple, followed by a classification of apps translated into the language that Unity developers are more accustomed to.

visionOS app classification:

Windowed Apps – Apps that run in visionOS’ native 2D window

Immersive Apps – Any application that takes advantage of Apple Vision Pro’s spatial computing to anchor virtual content in the real world

Shared Space – Allows multiple immersive or windowed apps to be rendered simultaneously side by side

Immersive Space – Covers mixed reality apps, or in other words, apps that let you mix virtual content with real-world objects using passthrough

Fully Immersive Space – This is essentially a Virtual Reality app that completely replaces the real world with the digital

Unity PolySpatial app mode classification

Luckily, Unity’s XR Plug-in Management takes care of this classification and lets you choose exactly what type of app you want to develop. In addition, it automatically installs additional plugins required for a given app mode.

Virtual Reality App – Fully Immersive Space

Mixed Reality App – Volume or Immersive Space

Windowed App – 2D Window

Volume Cameras

With all the terms mentioned above, the main difference we can see when developing in Unity is the volume camera setup. For Immersive apps, volume cameras are used to define whether an app runs in a shared or an immersive space, or in other words, in a bounded or an unbounded volume:

Bounded volume – Has dimensions and transform defined in Unity, as well as a specific real-world size. It can be repositioned in the real world by the user but cannot be scaled. Content is clipped by RealityKit to ensure only content inside the volume is rendered.

Unbounded volume – In this mode, the volume camera renders all content and allows it to fully blend with the passthrough for a more immersive experience. There can only be one unbounded camera active at a time.
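
To make the difference between the two modes more concrete, here is a minimal sketch of the idea in plain Python. It is not Unity or RealityKit code: `BoundedVolume` and `visible_points` are hypothetical names, the volume is assumed to be an axis-aligned box with no rotation, and content is reduced to single points. The real clipping is done by RealityKit on rendered content.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class BoundedVolume:
    """Hypothetical stand-in for a bounded volume: an axis-aligned box."""
    center: Vec3
    dimensions: Vec3  # full width, height, and depth in scene units

    def contains(self, point: Vec3) -> bool:
        # A point is inside when it lies within half the box size on every axis.
        return all(
            abs(p - c) <= d / 2
            for p, c, d in zip(point, self.center, self.dimensions)
        )


def visible_points(points, volume=None):
    """Return the points that would be rendered.

    volume=None models an unbounded volume, where nothing is clipped.
    """
    if volume is None:
        return list(points)
    return [p for p in points if volume.contains(p)]
```

With a unit-sized bounded volume centered at the origin, a point at `(0.6, 0, 0)` falls outside the box and is dropped, while the same point is kept when no volume is passed.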

Choosing the Right Input Type for Your Vision Pro App

There are multiple input types supported by Apple Vision Pro. Since some input types only work with specific types of volumes, it is important to consider what type of input is required for a given project when deciding which volume type will be used to build the immersive app.

Look & Tap – This is the most common type of interaction with immersive apps. You can use Look & Tap to either select an object at a distance or reach out and grab objects directly.

Hand & Head Pose Tracking – This input type works with Unity’s Hands package, which provides low-level hand data. The head pose is provided through the Input System. This type of input is available only for Unbounded Volumes, in other words, not for apps running in Windowed mode or Shared Space.

Augmented Reality Data from ARKit – This input includes plane detection, world mesh, or image markers, as typically used in Augmented Reality app development for iOS. Like Hand & Head Pose Tracking, this type of input is only available for Unbounded Volumes.

Bluetooth Devices – Keyboards, controllers, and other system-supported devices can be accessed through Unity’s Input System.
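
The restrictions listed above can be turned into a small planning helper. The sketch below is plain Python with hypothetical names (`VolumeMode`, `InputType`, `is_available`, `must_be_unbounded`), not a Unity API. It encodes only what the list states: Hand & Head Pose Tracking and ARKit data need an unbounded volume. Treating Look & Tap and Bluetooth devices as available in both modes is our assumption, since the list does not restrict them.

```python
from enum import Enum


class VolumeMode(Enum):
    BOUNDED = "bounded"
    UNBOUNDED = "unbounded"


class InputType(Enum):
    LOOK_AND_TAP = "look_and_tap"
    HAND_AND_HEAD_POSE = "hand_and_head_pose"
    ARKIT_DATA = "arkit_data"
    BLUETOOTH_DEVICES = "bluetooth_devices"


# Stated in the list above: these inputs work only with unbounded volumes.
UNBOUNDED_ONLY = frozenset({InputType.HAND_AND_HEAD_POSE, InputType.ARKIT_DATA})


def is_available(input_type: InputType, mode: VolumeMode) -> bool:
    """Check whether an input type can be used in the given volume mode.

    Assumption: inputs without a stated restriction work in both modes.
    """
    return mode is VolumeMode.UNBOUNDED or input_type not in UNBOUNDED_ONLY


def must_be_unbounded(needed_inputs) -> bool:
    """Return True if any required input forces an unbounded volume."""
    return not UNBOUNDED_ONLY.isdisjoint(needed_inputs)
```

For example, a project that needs plane detection from ARKit would get `True` from `must_be_unbounded`, which settles the volume type before any scene work starts.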

Building and Deploying Apps to Apple Vision Pro

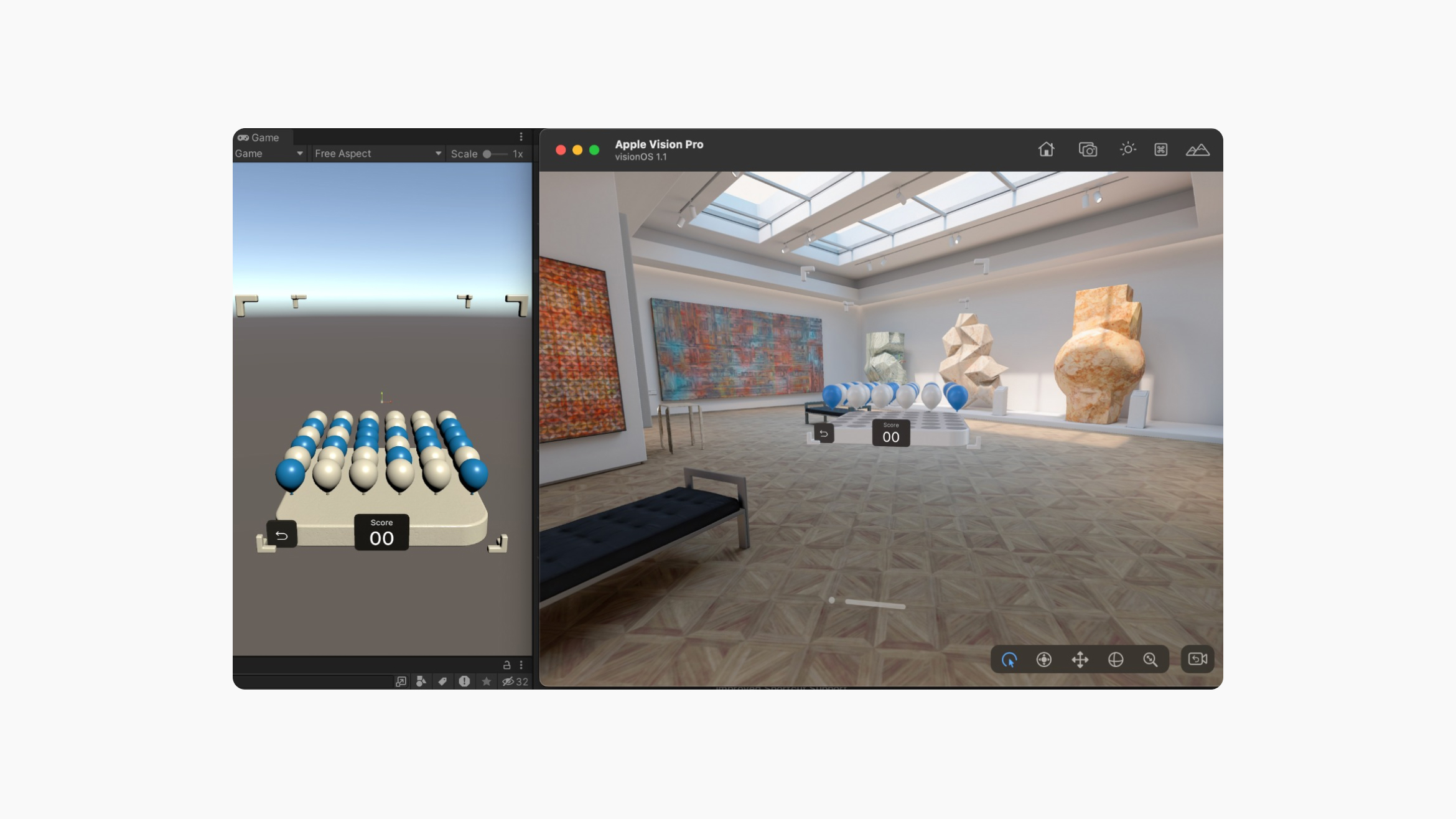

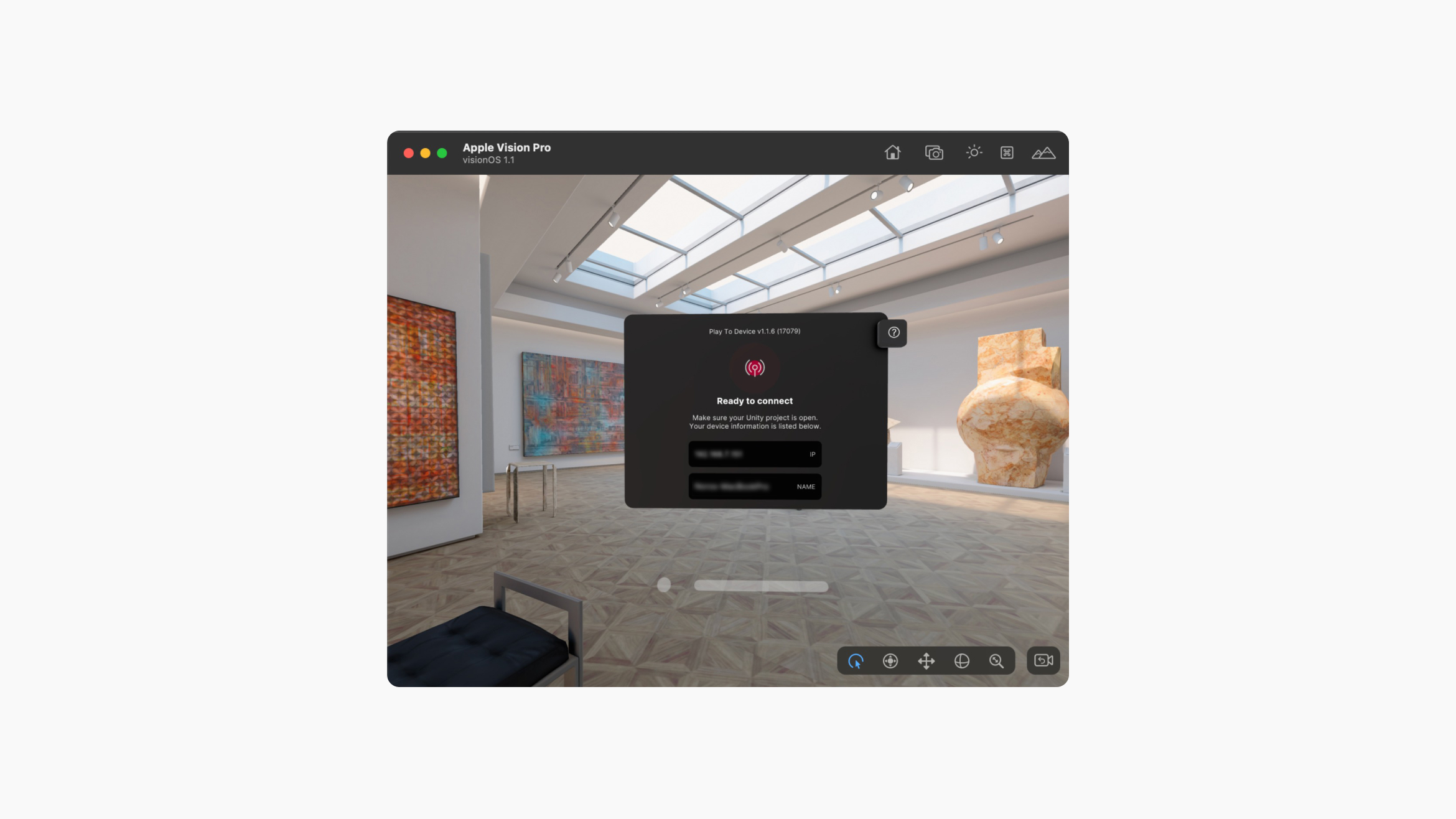

One of the most valuable features that makes developers' lives easier is the “Play to Device” feature that is included in the Unity PolySpatial package. This feature allows for building and running apps directly on both a physical device and a device simulator, and it also enables making live changes to the scene and debugging apps in the Unity editor.

This is particularly useful when developing mixed reality apps, where it is crucial to ensure that the content size, lighting, and materials are correctly calibrated and seamlessly blend with the real world.

When building to Vision Pro through Xcode, you can choose between a wired or a wireless connection. Although the wired connection is much faster and is recommended for larger projects, it requires an additional Developer Strap, which is not included with the Vision Pro as standard and must be purchased separately for $299.

Fortunately, the wireless connection can be set up easily and allows the headset to be ready for development right out of the box.

Final Thoughts: Reflecting on Apple Vision Pro

The Apple Vision Pro is a great headset with powerful hardware, which lets us consider developing extended reality experiences that were not previously possible. It is expensive and takes some getting used to for anyone trying it for the first time. But we are excited that Apple and Unity have provided us with familiar development tools and let us start working on exciting new projects much sooner than we anticipated.